Google Updates Robots.txt Parser on GitHub: What You Need to Know!

In the world of website management and search engine optimization (SEO), robots.txt files play a crucial role. These files tell search engine crawlers which pages or files they can or cannot request from your site. Google recently updated its open-source robots.txt parser on GitHub, and the change matters to website owners and SEO professionals. Let’s break down what these updates mean and how they might affect you.

What is a robots.txt file?

Think of a robots.txt file as a set of instructions for search engine bots. It tells them which parts of your site they may crawl and which they should stay out of. The file must sit at the root of your site (for example, https://example.com/robots.txt), and it applies only to that host, protocol, and port, so a subdomain such as shop.example.com needs its own file. It is one of the first things crawlers check when they visit your site.
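Because crawlers look for the file at a fixed location, you can work out which robots.txt governs any page URL. The sketch below uses Python’s standard `urllib.parse` module; the helper name `robots_txt_url` is our own, and it assumes the usual rule that each scheme and network location (host plus optional port) has its own robots.txt at the path `/robots.txt`.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    Keeps the scheme and network location (host and optional port) and
    replaces the path, query, and fragment with the fixed /robots.txt path.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/post?id=1"))
# https://example.com/robots.txt
print(robots_txt_url("https://shop.example.com:8443/cart"))
# https://shop.example.com:8443/robots.txt
```

Note that the subdomain and the non-default port each get their own file; rules in https://example.com/robots.txt do not cover shop.example.com.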

Why are robots.txt files important?

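Robots.txt files help you control how search engines crawl your site. For example, you might use them to keep crawlers out of private admin pages or away from duplicate content. Keep in mind that robots.txt controls crawling, not indexing: if other pages link to a blocked URL, Google may still list that URL in search results without crawling it. To keep a page out of search results, use a noindex directive or password protection instead. Robots.txt files also help conserve your site’s crawl budget by guiding bots toward your most important pages.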
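Python’s standard library ships a simple robots.txt parser, `urllib.robotparser`, which is handy for checking what a set of rules blocks. It is not Google’s parser: it applies the first matching rule rather than the most specific one, and it does not understand `*` or `$` wildcards in paths, so treat it as a rough check for simple files. The sample rules and URLs below are illustrative assumptions, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content (an assumption for this example).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""

parser = RobotFileParser()
# parse() takes an iterable of lines, so no network request is made.
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://example.com/admin/settings",
    "https://example.com/search?q=shoes",
    "https://example.com/blog/robots-guide",
):
    # can_fetch() answers: may this user agent request this URL?
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url}: {'crawl' if allowed else 'blocked'}")
```

A `blocked` result only means a compliant crawler will not request the URL; it says nothing about whether the URL can still appear in search results.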

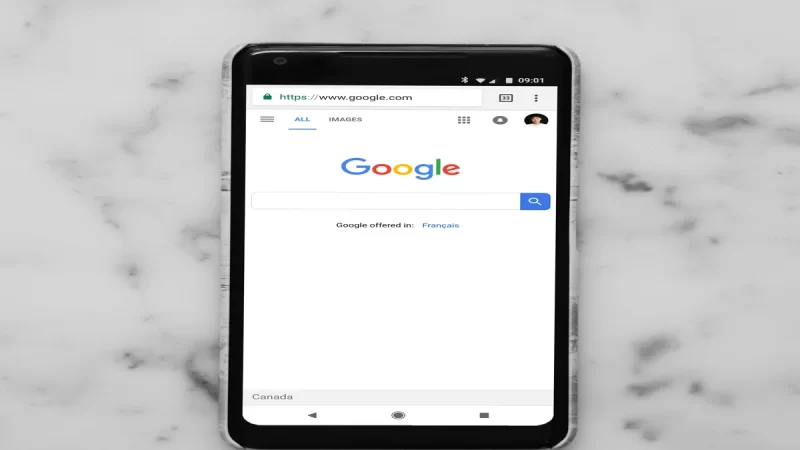

What are the recent updates to Google’s robots.txt parser?

Google recently updated its robots.txt parser on GitHub, the platform where it shares its open-source projects. The parser is the C++ library Google’s production systems use to parse and match robots.txt rules, and these updates improve how it interprets robots.txt files, making it more efficient and accurate.
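Interpreting a robots.txt file mostly comes down to deciding which rule applies to a given URL path. The Robots Exclusion Protocol, standardized as RFC 9309, says the most specific (longest) matching rule wins and `Allow` should win a tie; Google also documents `*` as matching any sequence of characters and a trailing `$` as marking the end of the path. The Python sketch below implements only that matching step for a single group of rules. It is our own simplified illustration, not a port of Google’s C++ code, and it skips user-agent grouping and percent-encoding normalization.

```python
import re
from typing import Iterable, Tuple

Rule = Tuple[str, str]  # (directive, path pattern), e.g. ("Disallow", "/shop/")


def _pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a regex anchored at the start.

    '*' matches any run of characters; a trailing '$' pins the end of the path.
    Every other character is matched literally.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + (r"\Z" if anchored else ""))


def is_allowed(path: str, rules: Iterable[Rule]) -> bool:
    """Longest-match decision in the style of RFC 9309 (simplified sketch)."""
    best_len = -1
    best_allow = True  # if no rule matches, the path is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty value matches nothing
        if _pattern_to_regex(pattern).match(path) is None:
            continue
        allow = directive.lower() == "allow"
        # A longer pattern is more specific; on a tie, Allow wins.
        if len(pattern) > best_len or (len(pattern) == best_len and allow):
            best_len = len(pattern)
            best_allow = allow
    return best_allow


# Hypothetical rules: block /shop/ except the sale section, and block PDFs.
RULES = [
    ("Disallow", "/shop/"),
    ("Allow", "/shop/sale/"),
    ("Disallow", "/*.pdf$"),
]

print(is_allowed("/shop/cart", RULES))             # False
print(is_allowed("/shop/sale/shoes", RULES))       # True
print(is_allowed("/guides/intro.pdf", RULES))      # False
print(is_allowed("/guides/intro.pdf?v=2", RULES))  # True
```

Because the decision depends on specificity rather than on line order, the `Allow: /shop/sale/` rule overrides the broader `Disallow: /shop/` no matter where it appears in the file.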

Implications of the updates:

- Improved crawl efficiency: With a more accurate parser, Google can better understand which parts of your site are off-limits to crawlers. This means its crawlers won’t waste time and resources trying to access pages you don’t want crawled.

- Better indexing control: The updates may give website owners more precise control over which URLs Google crawls, and therefore over which content it can read for search results. By accurately specifying which pages may be crawled, you can steer crawlers toward your best and most relevant content; to keep a page out of search results entirely, use a noindex directive instead.

- Potential for SEO impact: While Google hasn’t explicitly stated how these updates will affect search rankings, any improvements to how it reads and understands robots.txt files could indirectly influence SEO. Ensuring your robots.txt file is correctly configured can help search engines prioritize your most important pages.

- Transparency and collaboration: By sharing these updates on GitHub, Google is promoting transparency and collaboration within the SEO community. Developers and website owners can review the changes and provide feedback, ultimately leading to a better web ecosystem for everyone.

What should website owners do?

If you’re a website owner or SEO professional, it’s essential to review and update your robots.txt file regularly. Make sure it accurately reflects your site’s structure and how you want search engines to crawl it. Keep an eye on Google’s announcements and updates to stay informed about any changes to their crawling and indexing processes.
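One way to make regular reviews concrete is to keep a short list of URLs whose crawl status you care about and re-check them whenever the file changes. The sketch below does that with Python’s `urllib.robotparser`; the function name `audit_robots_txt`, the sample file, and the expectations are hypothetical. Because the standard-library parser uses first-match semantics without wildcard support, a clean result is a first-pass check, not a guarantee of how Googlebot will read the file.

```python
from typing import Dict, List
from urllib.robotparser import RobotFileParser


def audit_robots_txt(robots_txt: str, expectations: Dict[str, bool],
                     user_agent: str = "Googlebot") -> List[str]:
    """Return one message per URL whose crawl status differs from the expectation.

    expectations maps a URL to True (should be crawlable) or False (should be blocked).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url, should_allow in expectations.items():
        allowed = parser.can_fetch(user_agent, url)
        if allowed != should_allow:
            want = "crawlable" if should_allow else "blocked"
            got = "crawlable" if allowed else "blocked"
            problems.append(f"{url}: expected {want}, got {got}")
    return problems


# Hypothetical file with a classic mistake: "Disallow: /" blocks the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

EXPECTATIONS = {
    "https://example.com/": True,
    "https://example.com/products/widget": True,
    "https://example.com/admin/": False,
}

for problem in audit_robots_txt(ROBOTS_TXT, EXPECTATIONS):
    print(problem)
```

Running a check like this before each deploy catches an accidental site-wide block before crawlers see it.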

Google’s recent updates to its robots.txt parser on GitHub are a positive development for website owners and SEO practitioners. By improving the accuracy and efficiency of how it interprets robots.txt files, Google is helping to ensure a better experience for both users and website administrators. Stay proactive in managing your robots.txt file to make the most of these updates and maintain control over your site’s visibility in search results.

FAQs:

What is a robots.txt file, and why does it matter for SEO?

A robots.txt file is a text file at the root of a website that tells search engine crawlers which pages or files they can or cannot request from the site. It matters for SEO because it controls which parts of your site search engines crawl, which in turn shapes what they can read and show in search results. It is not a reliable way to keep a page out of search results; use a noindex directive or password protection for that.

What did Google change?

Google recently updated its robots.txt parser on GitHub, where it shares its open-source projects. These updates include improvements to how the parser interprets robots.txt files, making it more efficient and accurate in understanding website owners’ intentions about crawling.

How do the updates affect website owners?

The updates to Google’s robots.txt parser can have several implications for website owners. These include improved crawl efficiency, better indexing control, potential SEO impact, and enhanced transparency and collaboration within the SEO community.

Why does a more accurate parser matter?

A more accurate robots.txt parser helps search engines like Google understand which parts of a website are off-limits to crawlers, which improves crawl efficiency and resource allocation. It also gives website owners more predictable control over which of their content gets crawled, potentially influencing SEO.

How can I make sure my robots.txt file is configured correctly?

Review and update it regularly so that it accurately reflects your site’s structure and how you want search engines to crawl it. Stay informed about Google’s announcements and updates to understand any changes to their crawling and indexing processes.